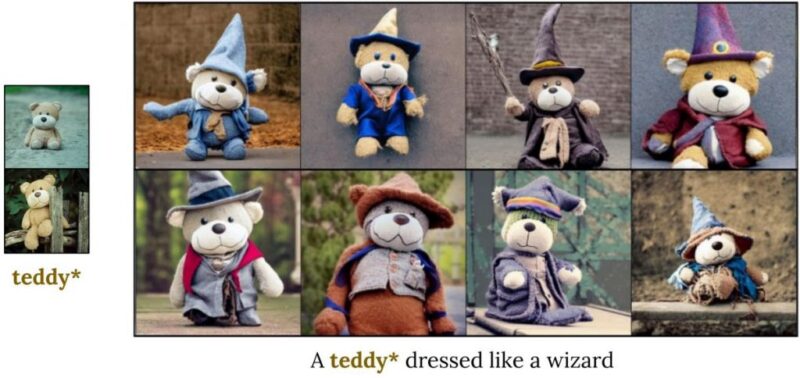

Nvidia recently showcased a generative neural network named “Perfusion,” notable for its compact size and rapid training. According to Nvidia, the model requires a mere 100 KB of space, an impressive feat compared with models like Midjourney, which requires more than 2 gigabytes of free storage, or at least 20,000 times as much.

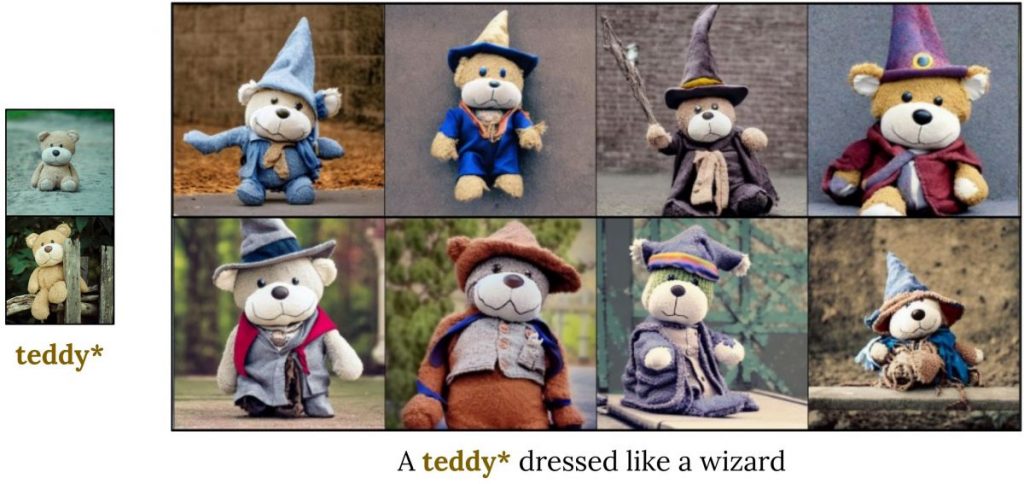

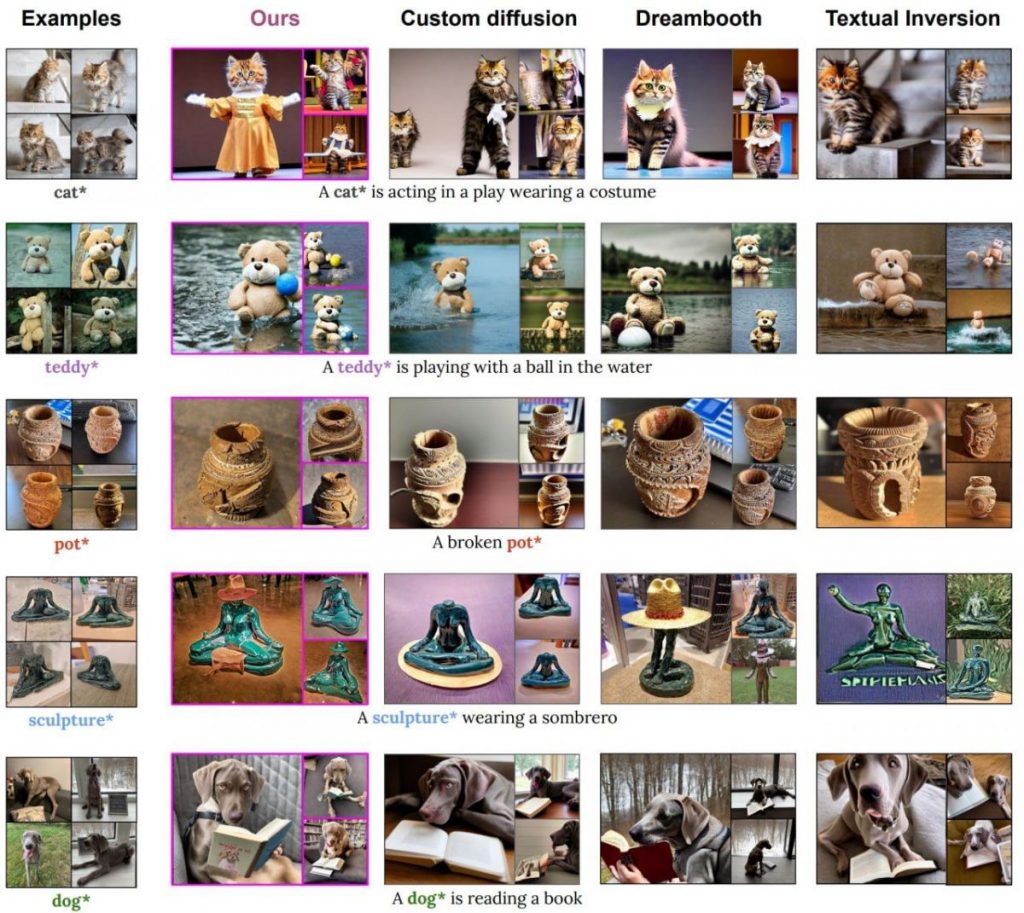

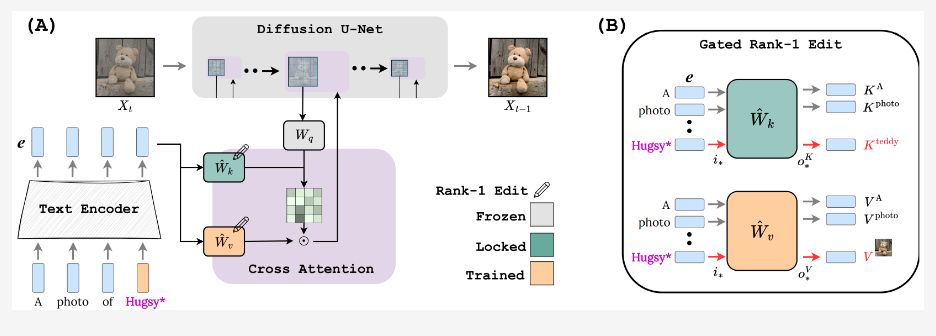

The key to Perfusion’s efficiency is a mechanism Nvidia calls “Key-Locking.” It lets the model associate a specific user request with a broader category, or “supercategory.” For instance, a request to produce a cat prompts the model to link the term “cat” to the broader category “feline.” Once this link is in place, the model processes the additional details in the user’s text prompt. Anchoring new requests to existing categories in this way streamlines the algorithm and speeds up processing.
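To make the idea more concrete, here is a toy sketch of key-locking in a single cross-attention step, in plain Python. It rests on an assumption the article does not spell out: that “locking” means a new concept token (the hypothetical `my_cat`) borrows the attention *key* of its supercategory word (`feline`), so image queries attend to it exactly as they would to “feline,” while the token still contributes its own *value*. All names, vectors, and matrices here are illustrative; this is not Nvidia’s implementation.

```python
import math
from typing import Dict, List, Optional, Tuple

Vector = List[float]
Matrix = List[Vector]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def matvec(m: Matrix, v: Vector) -> Vector:
    # Multiply a matrix (list of rows) by a column vector.
    return [dot(row, v) for row in m]


def softmax(xs: List[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(
    query: Vector,
    tokens: List[Vector],
    w_k: Matrix,
    w_v: Matrix,
    key_lock: Optional[Dict[int, Vector]] = None,
) -> Tuple[Vector, List[float]]:
    """One image query attending over text-token embeddings.

    key_lock (hypothetical) maps a token index to the embedding whose *key*
    that token should use; values always come from the token's own embedding.
    """
    key_lock = key_lock or {}
    keys = [matvec(w_k, key_lock.get(i, t)) for i, t in enumerate(tokens)]
    values = [matvec(w_v, t) for t in tokens]
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    # Weighted sum of the value vectors, one output dimension at a time.
    out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return out, weights


if __name__ == "__main__":
    # Toy 2-D embeddings; "my_cat" stands in for the user's new concept.
    emb = {"photo": [1.0, 0.0], "my_cat": [0.2, 0.9], "feline": [0.0, 1.0]}
    identity = [[1.0, 0.0], [0.0, 1.0]]
    query = [1.0, 0.5]
    prompt = [emb["photo"], emb["my_cat"]]

    _, free = cross_attention(query, prompt, identity, identity)
    _, locked = cross_attention(query, prompt, identity, identity, key_lock={1: emb["feline"]})
    print("attention without lock:", free)
    print("attention with key lock:", locked)
```

With the lock in place, the attention weights are identical to those the prompt would get if it said “feline” instead of the concept token, while the output still mixes in the concept’s own value vector. The sketch only borrows the key; how the concept’s value would actually be learned is outside its scope.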

Another advantage of the Perfusion model is its adaptability. The model can be set to follow a text prompt strictly or be given a degree of “creative freedom,” so its outputs can be tuned anywhere from precise to more general, depending on what the user needs.
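One plausible way to expose a dial like this, sketched here as an assumption rather than Nvidia’s documented interface, is a sigmoid gate that decides how much of a learned concept’s contribution is blended into the base model’s output. In this sketch, a hypothetical `bias` parameter sets how readily the gate opens: a low bias lets the concept dominate (more specific output), while a high bias falls back toward the base model’s general behavior. The functions `gate` and `blend` and their parameters are illustrative only.

```python
import math
from typing import List

Vector = List[float]


def gate(similarity: float, bias: float, temperature: float) -> float:
    """Sigmoid gate in (0, 1); a larger bias makes the gate harder to open."""
    return 1.0 / (1.0 + math.exp(-(similarity - bias) / temperature))


def blend(base: Vector, concept: Vector, similarity: float,
          bias: float, temperature: float = 0.1) -> Vector:
    """Interpolate from the base output toward the concept output by the gate value."""
    g = gate(similarity, bias, temperature)
    return [b + g * (c - b) for b, c in zip(base, concept)]


if __name__ == "__main__":
    base = [0.0, 0.0]     # hypothetical base-model output
    concept = [1.0, 1.0]  # hypothetical concept-specific output
    for bias in (0.2, 0.5, 0.8):
        print(bias, blend(base, concept, similarity=0.5, bias=bias))
```

Because the gate is evaluated at generation time in this sketch, the same learned concept can be applied strongly or loosely without retraining.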

Nvidia has said it plans to release the code, which would let others examine this compact neural network’s potential more closely.

Source: mPost