DALL-E and Stable Diffusion were only the beginning. As generative AI systems proliferate and companies work to differentiate their offerings from those of their competitors, chatbots across the internet are gaining the power to edit images, not just create them, with the likes of Shutterstock and Adobe leading the way. But with those new AI-empowered capabilities come familiar pitfalls, like the unauthorized manipulation of, or outright theft of, existing online artwork and images. Watermarking techniques can help mitigate the latter, while the new “PhotoGuard” technique developed by MIT CSAIL could help prevent the former.

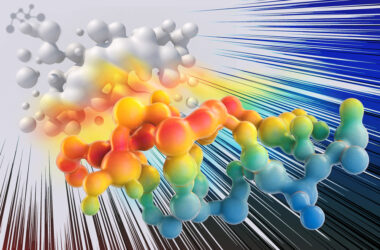

PhotoGuard works by altering select pixels in an image so that they disrupt an AI's ability to understand what the image is. Those “perturbations,” as the research team refers to them, are invisible to the human eye but easily readable by machines. The “encoder” attack method of introducing these artifacts targets the model's latent representation of the image, the compact mathematical description of the picture that the model actually works with, essentially preventing the AI from understanding what it is looking at.
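To make the encoder attack more concrete, here is a minimal toy sketch. It is not MIT's code. It assumes a hypothetical linear encoder `W` in place of a real model's image encoder and computes the perturbation with projected gradient descent (PGD), a standard way to find small, bounded perturbations. Each iteration nudges every pixel by a small signed step that pulls the image's latent toward the latent of a flat gray image, while `eps` caps how far any pixel may move so the change stays imperceptible. The function names, the matrix, and the values of `eps`, `step` and `iters` are all illustrative assumptions.

```python
# Toy sketch of an "encoder attack" computed with projected gradient descent.
# ASSUMPTION: a hypothetical linear encoder z = W @ x stands in for a real
# model's image encoder; images are flat lists of pixel values in [0, 1].


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def matvec_t(W, y):
    """Multiply the transpose of W by vector y."""
    return [sum(W[i][j] * y[i] for i in range(len(W))) for j in range(len(W[0]))]


def sign(v):
    """Return -1, 0 or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def latent_loss(W, x, delta, z_target):
    """Squared distance between the encoded perturbed image and the target latent."""
    z = matvec(W, [a + d for a, d in zip(x, delta)])
    return sum((zi - ti) ** 2 for zi, ti in zip(z, z_target))


def encoder_attack(W, x, z_target, eps=0.06, step=0.01, iters=200):
    """Find delta with |delta_i| <= eps that pulls W @ (x + delta) toward z_target."""
    delta = [0.0] * len(x)
    for _ in range(iters):
        z = matvec(W, [a + d for a, d in zip(x, delta)])
        residual = [zi - ti for zi, ti in zip(z, z_target)]
        grad = matvec_t(W, residual)  # gradient of latent_loss, up to a factor of 2
        for i in range(len(delta)):
            d = delta[i] - step * sign(grad[i])  # signed descent step
            d = max(-eps, min(eps, d))           # stay within the invisibility budget
            d = max(-x[i], min(1.0 - x[i], d))   # keep x + delta a valid image
            delta[i] = d
    return delta


# Usage: pull a 4-pixel "photo" toward the latent of a flat gray image.
W = [[0.5, 0.2, 0.1, 0.3],
     [0.1, 0.4, 0.6, 0.2]]
photo = [0.8, 0.3, 0.6, 0.9]
gray_latent = matvec(W, [0.5] * 4)
delta = encoder_attack(W, photo, gray_latent)
print("loss before:", latent_loss(W, photo, [0.0] * 4, gray_latent))
print("loss after: ", latent_loss(W, photo, delta, gray_latent))
```

The key design choice is the clipping inside the loop. The perturbation must lower the loss without ever moving any pixel by more than `eps`, which is what keeps an "immunized" image looking like the original to a human viewer.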

The more advanced, and more computationally intensive, “diffusion” attack method camouflages an image as a different image in the eyes of the AI. It defines a target image and optimizes the perturbations in the original image so that the model's edits are pulled toward that target. Any edits an AI then tries to make to these “immunized” images are applied to the fake “target” image instead, resulting in an unrealistic-looking generated image.
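The extra cost of the diffusion attack comes from where the optimization looks. Rather than matching the encoder's latent, it matches the *output* of the whole multi-step editing process, so every optimization step must run through, and backpropagate through, all of the model's steps. The toy sketch below illustrates that idea under a loud assumption: a hypothetical linear "denoising step" `A`, applied `steps` times, stands in for a real diffusion pipeline. It is not PhotoGuard's implementation, and the names and parameter values are illustrative.

```python
# Toy sketch of a "diffusion attack": optimize the perturbation through the
# whole multi-step editing process so its OUTPUT lands near a target image.
# ASSUMPTION: a hypothetical linear "denoising step" A, applied `steps` times,
# stands in for a real diffusion pipeline.


def matvec(M, x):
    """Multiply matrix M (a list of rows) by vector x."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


def matvec_t(M, y):
    """Multiply the transpose of M by vector y."""
    return [sum(M[i][j] * y[i] for i in range(len(M))) for j in range(len(M[0]))]


def sign(v):
    """Return -1, 0 or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def pipeline(A, x, steps):
    """Toy editing process: apply the same step A repeatedly."""
    for _ in range(steps):
        x = matvec(A, x)
    return x


def output_loss(A, x, delta, target, steps=5):
    """Squared distance between the pipeline's output and the target image."""
    out = pipeline(A, [a + d for a, d in zip(x, delta)], steps)
    return sum((o - t) ** 2 for o, t in zip(out, target))


def diffusion_attack(A, x, target, steps=5, eps=0.06, step=0.01, iters=200):
    """Find delta with |delta_i| <= eps that pulls pipeline(x + delta) toward target."""
    delta = [0.0] * len(x)
    for _ in range(iters):
        out = pipeline(A, [a + d for a, d in zip(x, delta)], steps)
        grad = [o - t for o, t in zip(out, target)]
        # Backpropagate through every pipeline step: (A^steps)^T = (A^T)^steps.
        # This per-iteration cost grows with `steps`, unlike the encoder attack.
        for _ in range(steps):
            grad = matvec_t(A, grad)
        for i in range(len(delta)):
            d = delta[i] - step * sign(grad[i])  # signed descent step
            d = max(-eps, min(eps, d))           # stay within the invisibility budget
            d = max(-x[i], min(1.0 - x[i], d))   # keep x + delta a valid image
            delta[i] = d
    return delta


# Usage: push the edited output of a 3-pixel "photo" toward a flat gray image.
A = [[0.9, 0.1, 0.0],
     [0.05, 0.9, 0.05],
     [0.0, 0.1, 0.9]]
photo = [0.9, 0.2, 0.7]
gray = [0.5, 0.5, 0.5]
delta = diffusion_attack(A, photo, gray)
print("loss before:", output_loss(A, photo, [0.0] * 3, gray))
print("loss after: ", output_loss(A, photo, delta, gray))
```

In a real system, each of those `steps` is a full pass through a large neural network, and gradients must flow back through all of them. That is why this style of attack needs far more compute and memory than the encoder attack.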

“The encoder attack makes the model think that the input image (to be edited) is some other image (e.g. a gray image),” Hadi Salman, an MIT doctoral student and the paper's lead author, told Engadget. “Whereas the diffusion attack forces the diffusion model to make edits towards some target image (which can also be some grey or random image).” The technique isn't foolproof: malicious actors could work to reverse engineer the protected image, potentially by adding digital noise to it, cropping it or flipping it.

“A collaborative approach involving model developers, social media platforms, and policymakers presents a robust defense against unauthorized image manipulation. Working on this pressing issue is of paramount importance today,” Salman said in a release. “And while I am glad to contribute towards this solution, much work is needed to make this protection practical. Companies that develop these models need to invest in engineering robust immunizations against the possible threats posed by these AI tools.”

Source: Engadget